When large research facilities such as the X-ray free-electron laser SwissFEL and the Swiss Light Source SLS are operating at full speed, they produce enormous amounts of data. To interpret the data and use it, for example, to develop new drugs or materials, PSI is now concentrating its expertise in a new research division: Scientific Computing, Theory, and Data.

Alun Ashton’s research career began in the 1990s, virtually in the Stone Age with regard to the use of computers. “As a student, I saved the data from my measurements on floppy disks,” the biochemist and computer scientist recalls. For anyone who doesn’t know what he’s referring to: floppy disks were interchangeable magnetic storage media that could hold, in their most modern version, a then-phenomenal 1.4 megabytes. “If I had to store the data that is generated today in just one experiment at the Swiss Light Source SLS on such floppies, I’d need millions of them – and several lifetimes to change the floppy disks.”

Luckily, information technology has advanced so rapidly that Ashton is able to use his time for more meaningful things. Even large amounts of data from the experiments at PSI are processed and stored quickly enough. So far, at least. By 2025 at the latest, when SLS 2.0 starts operating after the SLS upgrade, the researchers at PSI will face a major problem. After the upgrade, experiments can have up to a thousand times higher performance than with today’s SLS and other configurations, and can therefore produce much more data than before. In addition, better and faster detectors with higher resolution are coming. Where a beamline at today’s SLS generates one data set per minute, at SLS 2.0 it will generate the same amount of data in less than a second. And that is by no means the end of this rapid development. At SwissFEL, the brand-new Jungfrau detector running at full speed could generate 50 gigabytes per second; a conventional PC hard drive would be full to the brim after just a few seconds. Overall, the experiments at PSI are currently yielding 3.6 petabytes per year. When SLS 2.0 is fully operational, the experiments there alone could generate up to 30 petabytes per year, which would require around 50,000 PC hard drives. If, for some reason, you want to convert this to floppy disks, add six more zeros.
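The scale of these figures is easier to grasp with a quick back-of-the-envelope calculation. The sketch below reproduces them in Python under stated assumptions: decimal units, a 1.44-megabyte floppy disk, and a 600-gigabyte PC hard drive (the capacity implied by spreading 30 petabytes over 50,000 drives). These constants and the helper names are illustrative, not PSI specifications.

```python
# Back-of-the-envelope data volumes; all capacities are illustrative assumptions.
MB = 10**6
GB = 10**9
PB = 10**15

FLOPPY_BYTES = 1.44 * MB   # assumed: 3.5-inch high-density floppy disk
DRIVE_BYTES = 600 * GB     # assumed: implied by 30 PB spread over 50,000 drives
JUNGFRAU_RATE = 50 * GB    # bytes per second at full speed (figure from the text)
SLS2_PER_YEAR = 30 * PB    # upper estimate for SLS 2.0 (figure from the text)


def units_needed(total_bytes, unit_bytes):
    """Number of storage units of a given size needed to hold total_bytes."""
    return total_bytes / unit_bytes


def seconds_to_fill(capacity_bytes, rate_bytes_per_s):
    """Time until a storage device is full at a constant data rate."""
    return capacity_bytes / rate_bytes_per_s


drives = units_needed(SLS2_PER_YEAR, DRIVE_BYTES)
floppies = units_needed(SLS2_PER_YEAR, FLOPPY_BYTES)
fill_time = seconds_to_fill(DRIVE_BYTES, JUNGFRAU_RATE)

print(f"Hard drives per year: {drives:,.0f}")
print(f"Floppy disks per year: {floppies:.1e}")
print(f"Seconds to fill one drive: {fill_time:.0f}")
```

Under these assumptions, the floppy count comes out at roughly twenty billion, the same order of magnitude as the “six more zeros” quip.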

Wanted: fresh ideas

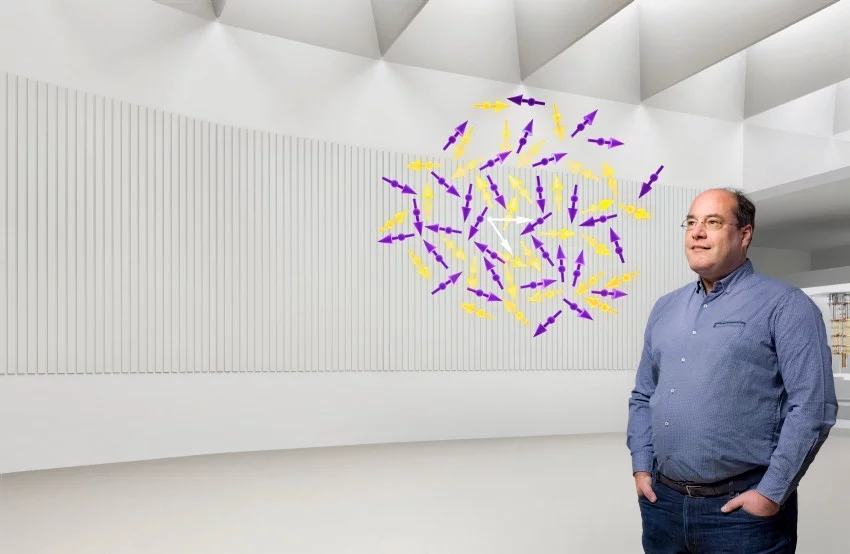

It has been clear for years: the new challenges at PSI cannot be mastered with old concepts. Fresh ideas are needed on how to handle the huge amounts of data in order to answer the increasingly demanding and numerous research questions. And this calls for a new dedicated research focus, with a corresponding organisational structure. The result is the new Scientific Computing, Theory, and Data Division, or SCD for short, which was established in July 2021. The SCD links existing units such as the Laboratory for Simulation and Modelling, whose interim manager is Andreas Adelmann, and new units such as the third site of the Swiss Data Science Centre at PSI, which complements the two locations at EPFL and ETHZ. Around seventy people in four departments are already conducting research and development and providing support; soon there will be a hundred. While the three laboratory heads Andreas Adelmann, Andreas Läuchli and Nicola Marzari are primarily concerned with scientific methods in their respective disciplines, Alun Ashton, with the Scientific IT Infrastructure and Services Department, leads a service unit that provides professional support to scientists in the Photon Science research division, in the SCD, and throughout PSI.

“The research departments should conduct research and should not maintain their own IT departments,” Ashton says. That is why centralisation in the SCD is the right step. “We’re not reinventing the wheel, but nevertheless with the SCD we have a unique selling point,” agrees Andreas Adelmann. The new research division should be better able to take advantage of synergies, distinguish itself in the international scientific community, and attract good people. Adelmann: “The SCD is more than the sum of its parts.”

One of his most interesting “customers” is Marco Stampanoni, says Alun Ashton with a wink. The ETH Zurich professor’s team has dedicated itself to tomographic X-ray microscopy, which places extremely high demands on computing power and storage. For example, to investigate how a warm gas penetrates a metallic liquid foam during the synthesis of a new alloy, the software has to calculate a three-dimensional snapshot from the data every millisecond. These are huge amounts of data that must be generated and processed. Other colleagues in the same lab are working on computational microscopy and, in particular, ptychography. This enhances conventional X-ray microscopy, which works with lenses but does not reach the fine resolution that would actually be possible with X-rays. In ptychography, an iterative algorithm reconstructs the X-ray image from the raw data of the detector, which is placed far away from the sample with no lens in between, and fully exploits the coherent properties of a synchrotron source. The underlying mathematical operation is computationally very demanding and must be performed thousands of times. With SLS 2.0, the demands on such computing power will increase significantly, and this will make the use of the supercomputer at the Swiss National Supercomputing Centre in Lugano indispensable.

You can’t rely on Moore’s law

And the performance gap is likely to widen further, because the researchers at PSI and in many other scientific disciplines can no longer rely on Moore’s law. Intel co-founder Gordon Moore predicted in 1965 that the number of transistors on a chip, which roughly corresponds to computing power, would double every 18 months – some sources quote a figure of 12 or 24 months. Moore’s law still applies today and will probably continue to do so during this decade. Unfortunately, that’s not enough. “The brilliance of sources such as SwissFEL or SLS 2.0 is increasing at a faster pace than Moore’s law,” warns Marco Stampanoni. “This requires smarter solutions rather than just more and more computing power.”
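To see what the different doubling periods mean in practice, the following sketch computes the growth factor over ten years for each of the three figures quoted. The function name and the ten-year horizon are illustrative choices, not taken from the article.

```python
def growth_factor(years, doubling_months):
    """Factor by which a quantity grows if it doubles every doubling_months."""
    return 2 ** (years * 12 / doubling_months)


# Compare the three doubling periods quoted for Moore's law over one decade.
for months in (12, 18, 24):
    factor = growth_factor(10, months)
    print(f"Doubling every {months} months: x{factor:,.0f} in 10 years")
```

With an 18-month doubling period, a decade yields roughly a hundredfold increase. For comparison, the article quotes up to a thousandfold performance gain for experiments from the SLS 2.0 upgrade alone.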

One might be machine learning. “It’s a truism: there’s much more in our data than we have been able to utilise up to now,” says Andreas Adelmann. Machine learning might find this hidden knowledge amid the huge mountains of data. And it can help save expensive beamtime at SLS and SwissFEL. In the past, the scientists took the data home after completing their measurements to analyse at their leisure. But experiments can also go wrong, and this is often not noticed until months later. Fast models based on machine learning can provide an indication of whether or not the measured values are plausible while an experiment is still running. If not, the experimenters have time to adjust their measuring equipment. Adelmann: “The data collection in experiments and the data analysis are moving closer together.”
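The idea of checking plausibility while data is still arriving can be illustrated with a much simpler stand-in than a trained model. The sketch below is not machine learning and not PSI’s method: it keeps running statistics with Welford’s online algorithm and flags readings that lie far outside them. The class name, threshold, warm-up length and readings are all hypothetical.

```python
import math


class PlausibilityMonitor:
    """Flags readings that deviate strongly from the running statistics.

    Hypothetical stand-in for a trained model: it uses Welford's online
    algorithm for mean and variance instead of machine learning.
    """

    def __init__(self, threshold=4.0, warmup=10):
        self.threshold = threshold  # allowed deviation in standard deviations
        self.warmup = warmup        # readings to collect before judging
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0               # running sum of squared deviations

    def check(self, value):
        """Return True if value looks plausible; plausible values update the statistics."""
        plausible = True
        if self.count >= self.warmup:
            std = math.sqrt(self.m2 / (self.count - 1))
            if std > 0 and abs(value - self.mean) > self.threshold * std:
                plausible = False
        if plausible:
            # Welford update; implausible readings are not absorbed.
            self.count += 1
            delta = value - self.mean
            self.mean += delta / self.count
            self.m2 += delta * (value - self.mean)
        return plausible


monitor = PlausibilityMonitor()
# Invented detector readings with one obvious outlier (25.0).
readings = [10.0, 10.2, 9.9, 10.1, 10.0, 9.8, 10.3, 10.1, 9.9, 10.0, 10.1, 25.0, 10.2]
flags = [monitor.check(r) for r in readings]
print(flags)
```

Because each reading is judged the moment it arrives, a flag can be raised while the experiment is still running rather than months later.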

Here Marco Stampanoni sees the SCD as an important partner. Many users and researchers working at PSI have nothing to do with IT and can be overwhelmed by it. “Physicians do not need to know how a synchrotron works or how and where exactly the data is stored.” If they are interested in the effect of a drug on the stability of bones, they do not want to have to work through the ten terabytes of data that a tomographic experiment at the synchrotron has delivered. All they need is a simple graphic from which they can read off the most important results. “In the future,” Stampanoni hopes, “the SCD will make a contribution here so that users can interrogate their data and generate scientific impact in a reasonable amount of time.”

Taking advantage of synergies

Xavier Deupi has no doubt that this will succeed. For the scientist in the Condensed Matter Theory research group, setting up the SCD was inevitable. “PSI needed to consolidate scientific computing in one organisational unit in order to be able to take advantage of synergies.” Now that the data scientists are in the same department, they can answer questions from Deupi’s team more quickly and start joint projects. “From their IT know-how and our knowledge of biology, new tools for research on proteins are being created.”

Deupi describes himself as a “heavy user” of the high-performance Merlin computer at PSI and the supercomputer in Lugano. For one experiment, he uses hundreds of processors that run for hundreds of hours, sometimes even several months. But that’s still not enough. Despite the long computing time, Deupi can only simulate changes in proteins that last a few microseconds. But when a molecule binds to a protein – for example adrenaline to receptors in heart cells – it takes at least milliseconds. Around one-third of all drugs bind to the proteins that Deupi studies. If it were possible to watch the entire process as if in a three-dimensional video, that would be a breakthrough for the development of such drugs. But even the most powerful computers are not yet able to do this.

So why make it so complicated when it can also be done simply? Many have been asking this question since Google introduced AlphaFold, software that uses artificial intelligence to build models of such proteins much more rapidly and accurately. You just enter the sequence and AlphaFold spits out the structure. “AlphaFold is extremely good,” praises Deupi. Yet the end of structural biology that some are already prophesying is not in sight. And he’s not worried about his job either. Firstly, the Google algorithm does not predict the entire structure of a protein and, secondly, you cannot simply infer the function of the protein from the structure. “AlphaFold makes no statements about how such proteins move.” This is precisely why large research facilities such as SLS and SwissFEL are still needed. “AlphaFold doesn’t replace these machines. Rather, they complement each other.”

Seeing the transformation through

The SCD is exactly the right place to test such new tools. To do this, experimenters, theoreticians, computer experts, engineers, and many more have to talk to each other. This is necessary so that computer scientists can find the right solutions for them, according to Marie Yao. She was hired at the SCD specifically to overcome the Babylonian confusion of languages and see the transformation through to ensure the best possible scientific results. If she worked in a company, she would call herself a manager for strategic alliances. “Change isn’t always easy,” says Yao, who worked for several years in a similar position at Oak Ridge National Laboratory in the USA. Some employees might cling to old processes out of fear that they will lose their value. She sees her role as promoting teamwork and creating an environment in which everyone feels secure and appreciated enough to contribute to better technical solutions.

Within Alun Ashton’s team at the interface between the SCD and other PSI divisions, Yao is coordinating development for the start of SLS 2.0 in 2025 and is also contributing to the development of technical solutions. By then, hardware, software, and networks must be ready and able to cope with the enormous amounts of data. A holistic approach is important, according to Yao: “The whole data pipeline is only as strong as its weakest link.”

An increasingly weak link in science, as in other areas of the economy, is the shortage of skilled workers. If there are no suitable experts, they have to be trained, often in interdisciplinary areas, says Yao. “Society gives us well-trained professionals, so we should give something back to society – one of the ways is by engaging with the education of the next generation.”

Tomorrow’s researchers have a lot of work ahead of them. Software for solving scientific problems is often 20 years old and in some cases is not efficient enough. Overcoming the deficits with even more computing power no longer works today. Scientific software must be made fit for the rapidly growing amounts of data and for trends in high-performance computing, such as the use of graphics processing units instead of conventional central processing units. “The SCD can help to attract people who can do just that,” Marie Yao believes.

Machine and modelling

That has already succeeded in the case of Andreas Läuchli. Alongside Andreas Adelmann and Nicola Marzari, he is one of the three laboratory heads at the SCD; his laboratory, the third, deals with theoretical and computational physics. A year ago he came from Innsbruck to PSI and EPFL, where he also holds an academic chair. Läuchli is expected to strengthen the theory but also work hand in hand with the experimental physicists and give them ideas for new experiments, especially at SwissFEL and at SLS 2.0. Läuchli considers establishing the SCD a good decision. “Experiments and theory are becoming more and more complex. So whoever wants to do research and publish successfully needs a good machine and good modelling.” He views the SCD as an important component of this synthesis.

Läuchli’s hobbyhorse is many-particle systems, which in physics includes everything that is more than a single hydrogen atom – in other words, almost all matter. All paths towards determining energy levels in these systems pass through the Schrödinger equation. It provides exact results for the hydrogen atom, but the computational effort increases exponentially for many-particle systems. For this reason, researchers resort to approximations even with just a few atoms. Yet it is not always certain that the approximations are close enough to reality.
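The exponential growth can be made concrete with a rough memory estimate. The sketch below assumes the simplest possible case, which is not stated in the article: each particle has only two states (such as a spin pointing up or down), each amplitude of the quantum state is stored as a 16-byte complex number, and no symmetries are used to shrink the problem. The function name is illustrative.

```python
BYTES_PER_AMPLITUDE = 16  # assumed: one complex number in double precision


def state_vector_bytes(n_particles, states_per_particle=2):
    """Memory needed to store every amplitude of an n-particle quantum state."""
    return states_per_particle ** n_particles * BYTES_PER_AMPLITUDE


# Every ten additional two-state particles multiply the memory by 1024.
for n in (10, 20, 30, 40, 50):
    print(f"{n:2d} particles: {state_vector_bytes(n) / 1e12:.6g} TB")
```

In this naive count, 50 two-state particles would already need about 18 petabytes, which is one reason such calculations typically exploit symmetries and conserved quantities to reduce the problem size.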

Then Läuchli brings out the crowbar. “Brute force” is the term for the method he uses to subdue the Schrödinger equation for up to 50 particles, with raw computing power. Then 20,000 processor cores with several terabytes of RAM can work together on such a problem simultaneously, for several weeks. Even the supercomputer in Lugano is then temporarily off-limits to other users. Läuchli: “Sometimes the brute force method is important to test whether or not our approximations are really valid.”

Naturally, not every research group can use the computer spontaneously. Each team needs to submit an application of up to 20 pages to the Swiss National Supercomputing Centre (CSCS) in Lugano, which is evaluated according to the scientific knowledge to be gained and the efficiency of the algorithms. The use of the computer cluster Merlin at PSI is less bureaucratic. However, anyone who has consumed a lot of computing time there will be downgraded and will have to get in line again, farther back in the queue.
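The downgrading of heavy users can be illustrated with a simple priority rule in the spirit of fair-share scheduling. This is a hypothetical sketch, not the actual configuration of Merlin: the exponential-decay formula, the function names, the allotment and the usage numbers are all assumptions made for illustration.

```python
def fair_share_factor(used_hours, allotted_hours):
    """Priority factor in (0, 1]: halves each time usage grows by one allotment."""
    return 2 ** (-used_hours / allotted_hours)


def queue_order(usage_by_team, allotted_hours):
    """Team names sorted from highest to lowest priority."""
    return sorted(
        usage_by_team,
        key=lambda team: fair_share_factor(usage_by_team[team], allotted_hours),
        reverse=True,
    )


# Invented usage figures in core hours; team_b has consumed the most.
usage = {"team_a": 500.0, "team_b": 20000.0, "team_c": 4000.0}
print(queue_order(usage, allotted_hours=5000.0))
```

Under this rule, the team that has consumed the most computing time ends up at the back of the queue, and its priority recovers only relative to teams that use more.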

Andreas Läuchli has great expectations for the Quantum Computing Hub (QCH), which was established at PSI in 2021. “Perhaps in the future we will be able to map physics problems that cannot be calculated on classical computers onto quantum computers.” The Hub would also benefit from this cooperation because quantum computers are based on the physical principles that Läuchli and his team are investigating. Andreas Läuchli: “SCD and QCH working together – I see great potential there.”

Text: Bernd Müller

© PSI provides image and/or video material free of charge for media coverage of the content of the above text. Use of this material for other purposes is not permitted. This also includes the transfer of the image and video material into databases as well as sale by third parties.